Methods: Google's AI Co-Scientist System

Technical Specifications

Core System Architecture

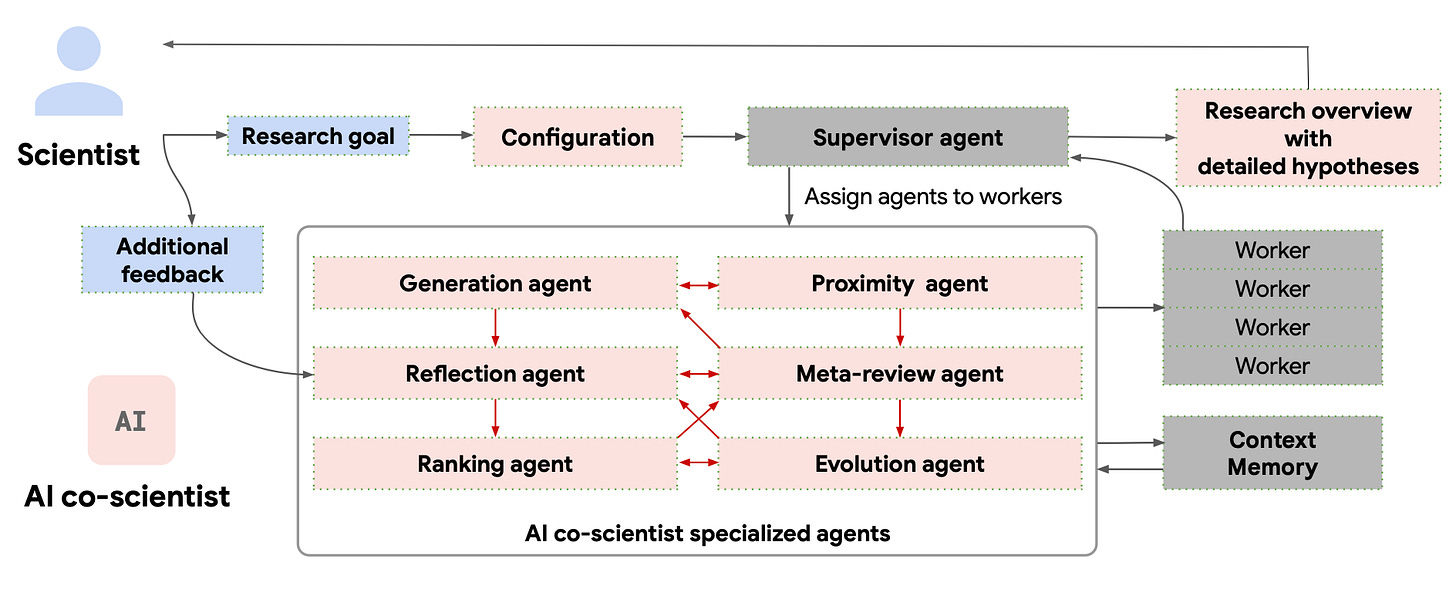

The AI co-scientist employs a multi-agent architecture built on Gemini 2.0, integrated within an asynchronous task execution framework. This architecture is structured around four key components:

Natural Language Interface: Scientists interact with the system primarily through natural language, allowing them to define initial research goals, refine them, provide feedback on generated hypotheses, and guide the system.

Asynchronous Task Framework: The system operates through an asynchronous, continuous, and configurable task execution framework. A dedicated Supervisor agent manages the worker task queue, assigns specialized agents to processes, and allocates computational resources.

Specialized Agents: Scientific reasoning is broken down into sub-tasks executed by specialized agents with customized instruction prompts. These agents function as workers coordinated by the Supervisor.

Context Memory: A persistent context memory stores and retrieves agent states and system information during computation, enabling iterative reasoning over long time horizons.
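The interplay of Supervisor, worker queue, and context memory can be illustrated with a minimal sketch. It assumes Python's `asyncio` as the task framework and a plain dictionary as the memory; the names `worker`, `generation_agent`, and `supervisor` are hypothetical, and the agent body is a placeholder where a model call would go. The source does not describe the actual implementation.

```python
import asyncio

async def generation_agent(goal):
    # Placeholder for a model call; returns a dummy hypothesis string.
    await asyncio.sleep(0)
    return f"hypothesis for: {goal}"

async def worker(name, queue, memory):
    # Each worker pulls (agent, payload) tasks until it is cancelled.
    while True:
        agent, payload = await queue.get()
        try:
            result = await agent(payload)
            # Record the result in the shared context memory.
            memory.setdefault("results", []).append((name, result))
        finally:
            queue.task_done()

async def supervisor(goals, n_workers=2):
    queue = asyncio.Queue()
    memory = {}  # stand-in for the persistent context memory
    for goal in goals:
        queue.put_nowait((generation_agent, goal))
    workers = [
        asyncio.create_task(worker(f"w{i}", queue, memory))
        for i in range(n_workers)
    ]
    await queue.join()  # wait until every queued task has been processed
    for w in workers:
        w.cancel()
    await asyncio.gather(*workers, return_exceptions=True)
    return memory

memory = asyncio.run(supervisor(["goal A", "goal B", "goal C"]))
```

In this sketch the Supervisor only enqueues tasks and waits; a fuller version would also decide which agent to schedule next based on the state held in memory.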

Agent Implementation Details

Figure 2: The AI co-scientist multi-agent architecture design

Initial Phase

Research Goal Submission: Scientist provides a natural language research goal

Research Plan Configuration: System parses the goal into preferences, attributes, and constraints

Task Initialization: Supervisor agent creates a task queue and allocates resources
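One way to picture the output of the initial phase is a small configuration record plus a seeded task queue. This is only a sketch: the class `ResearchPlanConfig`, the function `init_task_queue`, and all example values are hypothetical, and in the described system the parsing itself is performed by the model rather than by hand.

```python
from collections import deque
from dataclasses import dataclass, field

@dataclass
class ResearchPlanConfig:
    # Fields mirror the three categories named above; contents are illustrative.
    goal: str
    preferences: list[str] = field(default_factory=list)
    attributes: list[str] = field(default_factory=list)
    constraints: list[str] = field(default_factory=list)

def init_task_queue(config):
    # Seed the queue with one generation task; further tasks would be added as results arrive.
    return deque([("generation", config.goal)])

config = ResearchPlanConfig(
    goal="Suggest drug repurposing candidates for AML",
    preferences=["novel hypotheses"],
    attributes=["novelty", "feasibility"],
    constraints=["testable in vitro"],
)
queue = init_task_queue(config)
```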

Execution Phase

Generation Agent (Literature-Based Hypothesis Creation)

Literature Exploration: Searches and retrieves relevant articles using a web search tool (explicit step per A.4.1)

Analytical Reasoning: Processes articles with "chronologically ordered reasoning" (Figure A.24)

Initial Hypothesis Generation: Formulates multiple candidate hypotheses

Scientific Debate: Conducts simulated multi-expert debates to refine hypotheses (Figure A.25)

Reflection Agent (Hypothesis Evaluation)

Initial Review: Assesses correctness and novelty without external tools

Full Review: Uses web search to gather literature support (Figure A.5)

Deep Verification: Decomposes hypothesis into constituent assumptions (Figure A.6)

Observation Review: Determines if hypothesis explains unexplained experimental findings (Figure A.8)

Simulation Review: Simulates the hypothesis in a step-wise fashion to identify potential failure scenarios. This review assumes the LLM has a world model that enables it to simulate and predict various scientific phenomena.

Ranking Agent (Tournament Organization)

Tournament Creation: Sets up Elo-based tournament (initial rating: 1200)

Pairwise Comparisons: Conducts head-to-head debates between hypotheses (Figure A.9)

Score Assignment: Updates Elo ratings based on tournament outcomes
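The rating update after a single pairwise comparison can be sketched with the standard Elo formula. The initial rating of 1200 comes from the text above; the K-factor of 32 and the function names are assumptions, since the source does not state which update parameters the system uses.

```python
def expected_score(r_a, r_b):
    # Standard Elo expectation: probability that A beats B.
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))

def update_elo(r_a, r_b, a_wins, k=32):
    # k=32 is an assumed K-factor, not a value from the source.
    e_a = expected_score(r_a, r_b)
    s_a = 1.0 if a_wins else 0.0
    new_a = r_a + k * (s_a - e_a)
    new_b = r_b + k * ((1.0 - s_a) - (1.0 - e_a))
    return new_a, new_b

# Two fresh hypotheses start at 1200; the winner gains what the loser gives up.
a, b = update_elo(1200, 1200, a_wins=True)
print(a, b)  # 1216.0 1184.0
```

Because the update is zero-sum, the total rating in the tournament stays constant, so a hypothesis can only climb by winning debates against others.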

Proximity Agent (Similarity Computation)

Similarity Calculation: Computes similarity between research proposals; it does not participate in hypothesis generation

Builds a proximity graph that takes into account the research goal
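A proximity graph of this kind can be sketched as follows. The source does not say how similarity is computed, so this example assumes a simple bag-of-words cosine similarity and a hypothetical threshold of 0.3; it also ignores the research goal, which the described agent takes into account. The hypothesis strings are illustrative only.

```python
import math
from collections import Counter
from itertools import combinations

def cosine(a, b):
    # Bag-of-words cosine similarity; a real system would likely use learned embeddings.
    va, vb = Counter(a.lower().split()), Counter(b.lower().split())
    dot = sum(va[t] * vb[t] for t in va)
    norm = math.sqrt(sum(c * c for c in va.values())) * math.sqrt(
        sum(c * c for c in vb.values())
    )
    return dot / norm if norm else 0.0

def proximity_graph(hypotheses, threshold=0.3):
    # Adjacency list: index -> list of (neighbor index, similarity).
    graph = {i: [] for i in range(len(hypotheses))}
    for i, j in combinations(range(len(hypotheses)), 2):
        sim = cosine(hypotheses[i], hypotheses[j])
        if sim >= threshold:
            graph[i].append((j, sim))
            graph[j].append((i, sim))
    return graph

hypotheses = [
    "kinase inhibitor reduces AML cell proliferation",
    "kinase inhibitor blocks AML cell growth",
    "epigenetic modifier reverses liver fibrosis",
]
graph = proximity_graph(hypotheses)
```

Here the first two hypotheses end up connected and the third is isolated, which is the kind of structure that could be used to group near-duplicates or to pick similar hypotheses for tournament matches.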

Evolution Agent (Hypothesis Refinement)

Grounding: Generates search queries and attempts to identify reasoning gaps

Feasibility Improvement: Enhances practicality of promising hypotheses (Figure A.29)

Combination & Simplification: Creates new hypotheses by combining elements of top performers, and simplifies hypotheses so they are easier to test

Out-of-Box Thinking: Generates divergent alternatives to existing hypotheses (Figure A.30)

Meta-Review Agent (Synthesis)

Role: Central to the system's feedback loop and self-improvement. Operates on the tournament state to produce a meta-review of the hypotheses and scientific debates in the tournament

Pattern Identification: Identifies common strengths/weaknesses across hypotheses (Figure A.10-11)

Provides feedback to the Reflection Agent

Research Overview: Synthesizes findings into comprehensive report (Figure A.12-13)

The research overview serves as an additional input for the Generation Agent

Expert Identification: Suggests qualified domain experts (Figure A.14)

Meta-review output can be formatted for specific settings (e.g. NIH grant proposals)

Integration Components

Context Memory

State Persistence: Stores intermediate outputs, hypothesis rankings, and feedback

Knowledge Sharing: Enables agents to access previous reasoning and conclusions

Restart Capability: Supports system recovery after component failures
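State persistence and restart can be sketched with a JSON checkpoint file. This is an assumption for illustration: the class `ContextMemory`, its methods, and the file-based storage are hypothetical, and the source does not specify the storage backend.

```python
import json
import os
import tempfile

class ContextMemory:
    def __init__(self, path):
        self.path = path
        self.state = {}
        # On restart, reload whatever was last checkpointed.
        if os.path.exists(path):
            with open(path, encoding="utf-8") as f:
                self.state = json.load(f)

    def put(self, key, value):
        self.state[key] = value
        # Write to a temp file, then rename, so a crash never leaves a half-written checkpoint.
        tmp = self.path + ".tmp"
        with open(tmp, "w", encoding="utf-8") as f:
            json.dump(self.state, f)
        os.replace(tmp, self.path)

    def get(self, key, default=None):
        return self.state.get(key, default)

path = os.path.join(tempfile.mkdtemp(), "memory.json")
ContextMemory(path).put("elo", {"h1": 1216.0})
# A new instance simulates a process restart and recovers the stored ratings.
restored = ContextMemory(path).get("elo")
```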

Worker Processes

Task Execution: Handles individual agent operations as assigned by Supervisor

Resource Management: Allocates computational resources based on task priority

Progress Reporting: Updates Supervisor on task completion status

Test-Time Compute Scaling Methods

A key methodological innovation is the system's approach to test-time compute scaling:

Tournament Evolution Process: Self-improving hypothesis generation occurs through an Elo-based tournament where hypotheses compete in pairwise comparisons.

Feedback Propagation: The Meta-review agent generates feedback applicable to all agents, which is appended to their prompts in subsequent iterations. This enables continuous improvement without backpropagation or fine-tuning.

Compute Allocation: The Supervisor agent calculates comprehensive statistics about system state and progress, then strategically weights and samples specialized agents for execution.

Iterative Refinement: Hypotheses undergo multiple rounds of generation, review, ranking, and evolution, with quality improving through increased computational resources.

Self-Play Scientific Debate: Multi-turn simulated debates between expert perspectives allow for nuanced evaluation of competing hypotheses.
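Two of the mechanisms above, compute allocation by weighted sampling and feedback propagation by prompt appending, can be sketched in a few lines. The weights, the prompt wording, and the function names `sample_agent` and `with_feedback` are hypothetical; the source does not describe how the Supervisor derives its weights from system statistics.

```python
import random

def sample_agent(weights, rng):
    # Pick the next agent to run in proportion to its weight.
    agents = list(weights)
    return rng.choices(agents, weights=[weights[a] for a in agents], k=1)[0]

def with_feedback(base_prompt, feedback):
    # Append meta-review feedback to an agent prompt; no model weights are changed.
    if not feedback:
        return base_prompt
    lines = "\n".join(f"- {item}" for item in feedback)
    return base_prompt + "\n\nFeedback from earlier iterations:\n" + lines

rng = random.Random(0)  # seeded so the sketch is reproducible
weights = {"generation": 0.5, "reflection": 0.3, "evolution": 0.2}  # assumed values
picks = [sample_agent(weights, rng) for _ in range(1000)]
prompt = with_feedback("Review this hypothesis.", ["Check dosage feasibility."])
```

In a fuller version the weights would be recomputed each round from tournament statistics, so that, for example, generation is favored early and evolution later.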

Experimental Validation Methodology

The system's validation employed three complementary approaches:

Automated Evaluation

Concordance Analysis: Measured correlation between auto-evaluated Elo ratings and accuracy on the GPQA benchmark dataset

Test-Time Compute Scaling: Tracked improvements in auto-evaluated Elo ratings with increased computational resources across 203 distinct research goals

Baseline Comparison: Compared performance against Gemini 2.0 Pro Experimental, Gemini 2.0 Flash Thinking Experimental, OpenAI o1, OpenAI o3-mini-high, DeepSeek R1, and expert "best guess" solutions
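The concordance analysis can be pictured as grouping responses by Elo rating and computing accuracy within each group. The sketch below assumes a bucket width of 100 Elo points and uses made-up toy records purely to show the computation; neither the bucket width nor the values come from the source.

```python
def accuracy_by_elo_bucket(records, bucket_width=100):
    # records: iterable of (elo_rating, is_correct) pairs.
    buckets = {}
    for elo, correct in records:
        lo = int(elo // bucket_width) * bucket_width  # lower edge of the bucket
        hits, total = buckets.get(lo, (0, 0))
        buckets[lo] = (hits + int(correct), total + 1)
    return {lo: hits / total for lo, (hits, total) in sorted(buckets.items())}

# Toy data only, not results from the paper.
records = [
    (1150, False), (1180, True),
    (1210, True), (1260, False),
    (1320, True), (1390, True),
]
result = accuracy_by_elo_bucket(records)
```

If higher Elo buckets show higher accuracy, the auto-evaluated rating is concordant with correctness on the benchmark, which is what the analysis is meant to check.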

Expert Evaluation

Expert Panel: Conducted evaluations with domain experts who assessed outputs on novelty, impact, and overall preference

NIH-Style Grant Proposal Format: Generated drug repurposing proposals in NIH Specific Aims Page format, evaluated by six expert hematologists and oncologists

Evaluation Rubric: Used a 15-axis evaluation covering significance, innovation, rigor, and feasibility

End-to-End Wet-Lab Validation

Drug Repurposing:

Selected candidates through computational biology analysis using DepMap dependency scores

Measured IC50 (half-maximal inhibitory concentration) in AML cell lines

Validated both existing drugs with preclinical evidence and novel repurposing candidates

Novel Treatment Target Discovery:

Tested drugs targeting AI-identified epigenetic modifiers in human hepatic organoids

Measured anti-fibrotic activity through fold change of fibroblast activity

Antimicrobial Resistance Mechanism:

Provided co-scientist with background information on cf-PICIs

Compared generated hypothesis with unpublished experimental findings

Safety and Ethical Considerations

The system incorporates several safety mechanisms:

Initial Research Goal Safety Review: Automatically evaluates and rejects potentially unsafe research goals

Hypothesis Safety Review: Excludes potentially unsafe hypotheses from the tournament

Continuous Monitoring: Meta-review agent provides an overview of research directions to detect potential safety concerns

Explainability: All components provide detailed reasoning traces for auditing system decisions

Comprehensive Logging: All system activities are logged for future analysis

Adversarial Testing: Preliminary red teaming with 1,200 adversarial research goals across 40 topic areas

Computational Implementation

Base Model: Gemini 2.0 underpins all agents in the system

Model Agnosticism: The co-scientist framework is designed to be model-agnostic and portable to other similar models

Context Window: Leverages the long context capabilities of Gemini 2.0 to process complex research goals and extensive documentation

Tool Integration: Can utilize domain-specific tools like open databases and specialized AI models (e.g., AlphaFold)

Expert-in-the-Loop Design: Scientists can refine goals, provide manual reviews, contribute their own hypotheses, and direct follow-up on specific directions